Machine vision in intelligent debugging

Analysis: the Lingxi machine vision system in practice for intelligent debugging at an enterprise

The Lingxi machine vision system, running on the transparent factory all-in-one machine, applied to the intelligent debugging of excavators

Machine vision plays an important role in smart factories, where it can raise production capacity and improve product qualification rates. A machine vision system offers high detection accuracy and can acquire and automatically process large amounts of information quickly. It upgrades the "human eye + simple tool" inspection mode to fast, high-precision automatic inspection.

Machine vision is used across many industries, and it plays an irreplaceable role in processing and manufacturing in particular. Because it combines industrial cameras with intelligent software algorithms, it can serve several functions, for example visual inspection of workpieces, measurement of workpiece dimensions, reading of barcodes and QR codes, and locating workpieces. Building on these functions, we have developed solutions for various stages of production and manufacturing and have applied them widely in practice.

In practice, however, deploying machine vision in factory production and quality inspection faces many challenges, and a deployed system often fails to deliver the expected functions. For example: 1. The data does not leave the factory but the model is developed in the cloud, so the model has no suitable data to train on; 2. The model and the data are both on site, but the factory lacks artificial intelligence professionals to process the data and to train and tune the model. Additional specialists therefore have to travel to the site to complete the work, which lowers efficiency and raises costs.

To address these practical problems, this article introduces an application that uses a transparent factory all-in-one machine to deploy machine vision at an industrial site and identify the operating position of an excavator. Through this case, we hope readers can understand a new product form and how it helps a project site complete model deployment, data annotation, and optimization of the machine vision system, with the entire process carried out remotely.

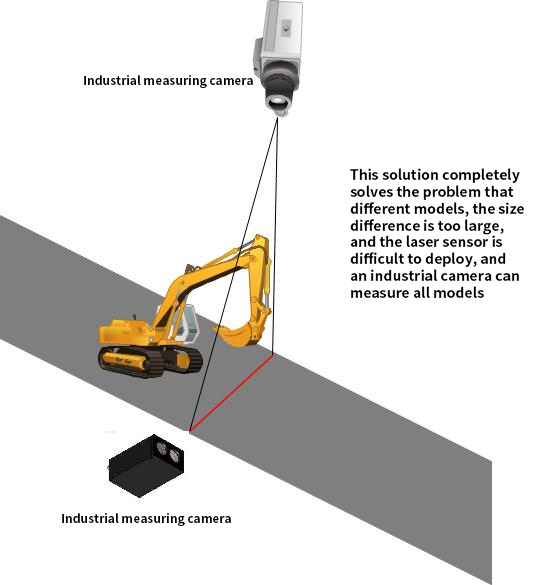

The requirements of this project are as follows: as shown in the figure below, we need to test whether the excavator deflects during operation (i.e., drifts to the left or to the right).

Our plan is as follows: deploy industrial inspection cameras and photoelectric sensors at the starting and ending points of the test area. When a photoelectric sensor detects that the vehicle has reached the end point, it notifies the industrial cameras to capture photos of the vehicle. Machine vision is then used to measure the vehicle's distance relative to the center point, and the offset is calculated automatically.
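The end-point measurement described above can be illustrated with a minimal sketch. It assumes the vision model has already returned the pixel x-coordinates of the area edges and of the excavator outline in one end-point photo, and that larger x means further right in the image. The class `EndpointFrame` and both function names are hypothetical and are not part of the Lingxi system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EndpointFrame:
    """Pixel x-coordinates detected in one end-point photo (hypothetical inputs)."""
    lane_left_px: float      # left edge of the test area
    lane_right_px: float     # right edge of the test area
    vehicle_left_px: float   # left edge of the excavator outline
    vehicle_right_px: float  # right edge of the excavator outline


def center_offset_px(frame: EndpointFrame) -> float:
    """Signed offset of the vehicle center from the area center, in pixels.

    Positive means the vehicle sits to the right of the center line,
    negative means it sits to the left (assumes x grows to the right).
    """
    lane_center = (frame.lane_left_px + frame.lane_right_px) / 2
    vehicle_center = (frame.vehicle_left_px + frame.vehicle_right_px) / 2
    return vehicle_center - lane_center


def on_endpoint_trigger(sensor_blocked: bool, frame: EndpointFrame) -> Optional[float]:
    """Compute the offset only when the photoelectric sensor reports arrival."""
    if not sensor_blocked:
        return None  # vehicle has not reached the end point yet
    return center_offset_px(frame)


if __name__ == "__main__":
    frame = EndpointFrame(100.0, 900.0, 420.0, 640.0)
    print(on_endpoint_trigger(True, frame))
```

The result here is in pixels; converting it to a physical distance requires a scale factor, which depends on the excavator model and the camera setup.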

The difficulties in implementing this solution with machine vision are: 1. The industrial camera must identify the edges of the monitored area, its center point, and the edges of the excavator outline. 2. The industrial camera must automatically identify the excavator model in order to obtain the excavator's size parameters, operating speed parameters, and so on. 3. The system must be able to judge whether the excavator has deviated from the center point.

The specific implementation steps are as follows:

1. Annotate the edges of the excavator and of the test area so that the machine vision recognition program can identify them automatically. We deployed an all-in-one machine on site, placed cameras at four positions around the excavator (front, rear, left, and right) and at the starting and end points of the track, and then annotated the data remotely to mark the edges of the excavator and of the track.

2. Identify the excavator model. The model is obtained by reading the QR code on the machine body. Combined with the annotations from step 1, the annotated points are then matched to the model's actual dimensions, so that annotated points and actual sizes correspond one to one.

3. Before the excavator starts, mark the position of its edges and measure the distance between each edge and the edge of the area. When the excavator reaches the end point, measure the distances between the excavator's edges and the area edges on both sides again. Comparing the two sets of measurements gives the runtime offset.
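Steps 2 and 3 can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's actual implementation: the model code is assumed to have been decoded from the QR code already, the lookup table `MODEL_WIDTH_MM` and its values are invented for the example, the camera is assumed to look straight down so that one scale factor applies across the frame, and the 50 mm tolerance is a placeholder.

```python
# Hypothetical lookup: model code (as read from the QR code) -> body width in mm.
MODEL_WIDTH_MM = {"DEMO-A": 2800.0, "DEMO-B": 3200.0}


def mm_per_pixel(model_code: str, vehicle_width_px: float) -> float:
    """Scale factor obtained by matching annotated edges to the known body width."""
    if vehicle_width_px <= 0:
        raise ValueError("vehicle width in pixels must be positive")
    return MODEL_WIDTH_MM[model_code] / vehicle_width_px


def side_gaps_mm(lane_left_px, lane_right_px, veh_left_px, veh_right_px, scale):
    """Gaps between the excavator edges and the area edges, in millimetres."""
    left_gap = (veh_left_px - lane_left_px) * scale
    right_gap = (lane_right_px - veh_right_px) * scale
    return left_gap, right_gap


def runtime_offset_mm(start_gaps, end_gaps):
    """Signed lateral drift between start and end; positive means drift to the right.

    Drifting right widens the left gap and narrows the right gap, so the two
    changes are averaged to reduce the effect of measurement noise.
    """
    d_left = end_gaps[0] - start_gaps[0]
    d_right = end_gaps[1] - start_gaps[1]
    return (d_left - d_right) / 2


def classify(offset_mm: float, tolerance_mm: float = 50.0) -> str:
    """Label the drift direction; the tolerance value is an assumption."""
    if abs(offset_mm) <= tolerance_mm:
        return "centered"
    return "right" if offset_mm > 0 else "left"


if __name__ == "__main__":
    scale = mm_per_pixel("DEMO-A", 640.0 - 360.0)           # 280 px wide body
    start = side_gaps_mm(100.0, 900.0, 360.0, 640.0, scale)  # before starting
    end = side_gaps_mm(100.0, 900.0, 372.0, 652.0, scale)    # at the end point
    drift = runtime_offset_mm(start, end)
    print(drift, classify(drift))
```

Using the known body width as the reference length is one way to realize the one-to-one matching of annotated points and actual sizes; a calibration target of known size on the track would serve the same purpose.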

The entire deployment and debugging process is shown in the figure below. Cameras are deployed along the track and transmit their data to the all-in-one machine, which is deployed at the industrial site. One all-in-one machine can connect up to 16 high-definition cameras, which fully meets the on-site requirements, and it stores the collected data locally. The AI engineer accesses the all-in-one machine at the industrial site over the 5G network and a VPN, so data annotation and model tuning can be performed in real time. The AI engineer develops the model locally, then deploys it to the model training environment on the all-in-one machine for training, and publishes the mature model to the AI cloud for on-site updating and use.

Transparent factory all-in-one machine

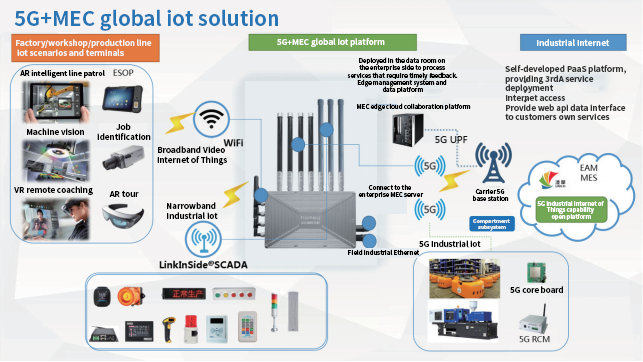

Born for intelligent industrial interconnection: one machine to solve the intelligent interconnection of industrial sites

It addresses the core pain points of the manufacturing industry (difficult integration, high operation and maintenance costs) and returns to the core value of manufacturing: higher OEE/OPE, lower MTTR, and higher MTBF.
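For readers unfamiliar with these metrics, the sketch below uses their standard textbook definitions: OEE is the product of availability, performance, and quality; MTBF is operating time divided by the number of failures; MTTR is total repair time divided by the number of repairs. The function names and sample figures are illustrative only and do not come from the product or the project.

```python
def oee(availability: float, performance: float, quality: float) -> float:
    """Overall Equipment Effectiveness as the product of its three factors (each 0..1)."""
    return availability * performance * quality


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean Time Between Failures: operating time divided by failure count."""
    return operating_hours / failures


def mttr(repair_hours: float, repairs: int) -> float:
    """Mean Time To Repair: total repair time divided by repair count."""
    return repair_hours / repairs


def steady_state_availability(mtbf_hours: float, mttr_hours: float) -> float:
    """Long-run fraction of time the equipment is up: MTBF / (MTBF + MTTR)."""
    return mtbf_hours / (mtbf_hours + mttr_hours)


if __name__ == "__main__":
    # Invented sample figures: 190 h of operation, 5 failures, 10 h of repairs.
    up = mtbf(190.0, 5)
    down = mttr(10.0, 5)
    print(up, down, steady_state_availability(up, down))
    print(oee(0.95, 0.90, 0.98))
```

The availability formula shows why the two directions matter: shortening MTTR or lengthening MTBF both raise the fraction of time the equipment is available.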

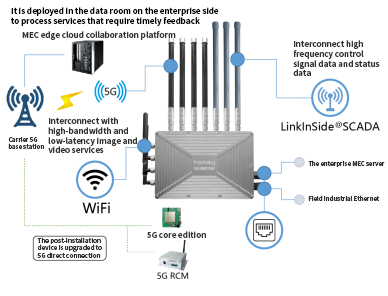

Industrial grade CPE

Integrated 5G+MEC | Operator network access certification

Built-in three major platforms

Industrial IoT Platform | Intelligent Manufacturing Platform | Edge Intelligence Platform

System architecture diagram

System architecture (shown in Figure 1)

1. Industrial IoT platform

(1) Wireless SCADA: 8-channel full-duplex spread-spectrum communication with millisecond-level latency, for connecting widely distributed acquisition and control terminals that send short, high-frequency messages;

(2) Wi-Fi: external high-gain antenna with a coverage radius of up to 50 m, used to connect video and interactive terminals; up to 32 terminals can be connected at the same time;

(3) 5G: self-developed high-performance module with 2 Gbps downlink and 630 Mbps uplink; supports full network communication and is used for on-site collaboration, edge-cloud collaboration, and collaboration between the site and the cockpit.

2. Intelligent manufacturing platform

(1) Built-in Humei (Andon) system;

(2) Built-in transparent factory system;

(3) Support 3rd... intelligent manufacturing system deployment;

(4) A single unit is enough to cover 5M1E management, and it works out of the box;

(5) High-performance 4-core...CPU processing platform;

(6) Self-developed data optimization processing algorithm to ensure smooth operation of the system;

3. AI computing platform

(1) Machine vision alarms for operators leaving their post, and equipment status push notifications;

(2) Vibration monitoring predictive maintenance PHM;

(3) AGV visual navigation and forklift automatic driving;

(4) AOI quality inspection and surface defect detection algorithm;

(5) Machine vision detection of and alarms for deviations from operating standards, and output statistics;

(6) 4-core high-performance processor;

(7) 8GB memory, 128GB system disk;

(8) Built-in 1 TB solid-state drive; data is stored locally and files can be retrieved at any time;

(9) Self-developed containerized service deployment platform;

(10) Support 3rd...AI model deployment.

In this way, on-site deployment and remote debugging of the machine vision system are both realized through the all-in-one machine. Apart from the installation personnel, no one needs to travel to the site: the AI engineer completes real-time model tuning through the all-in-one machine without visiting the site at any point, which greatly improves the efficiency of both the AI engineer and the on-site work.